kruci: Post-mortem of a UI library

I love doing experiments - side projects to my side projects, for fun or out of necessity.

Sometimes those experiments end up as something useful, other times - not so much. Let me tell you about one of the latter projects - about terminals, user interfaces, and trade-offs.

- Water

- Waste

- Wager

- Wisdom

- Wisdom - Widgets

- Wisdom - Layouts

- Summary

Water

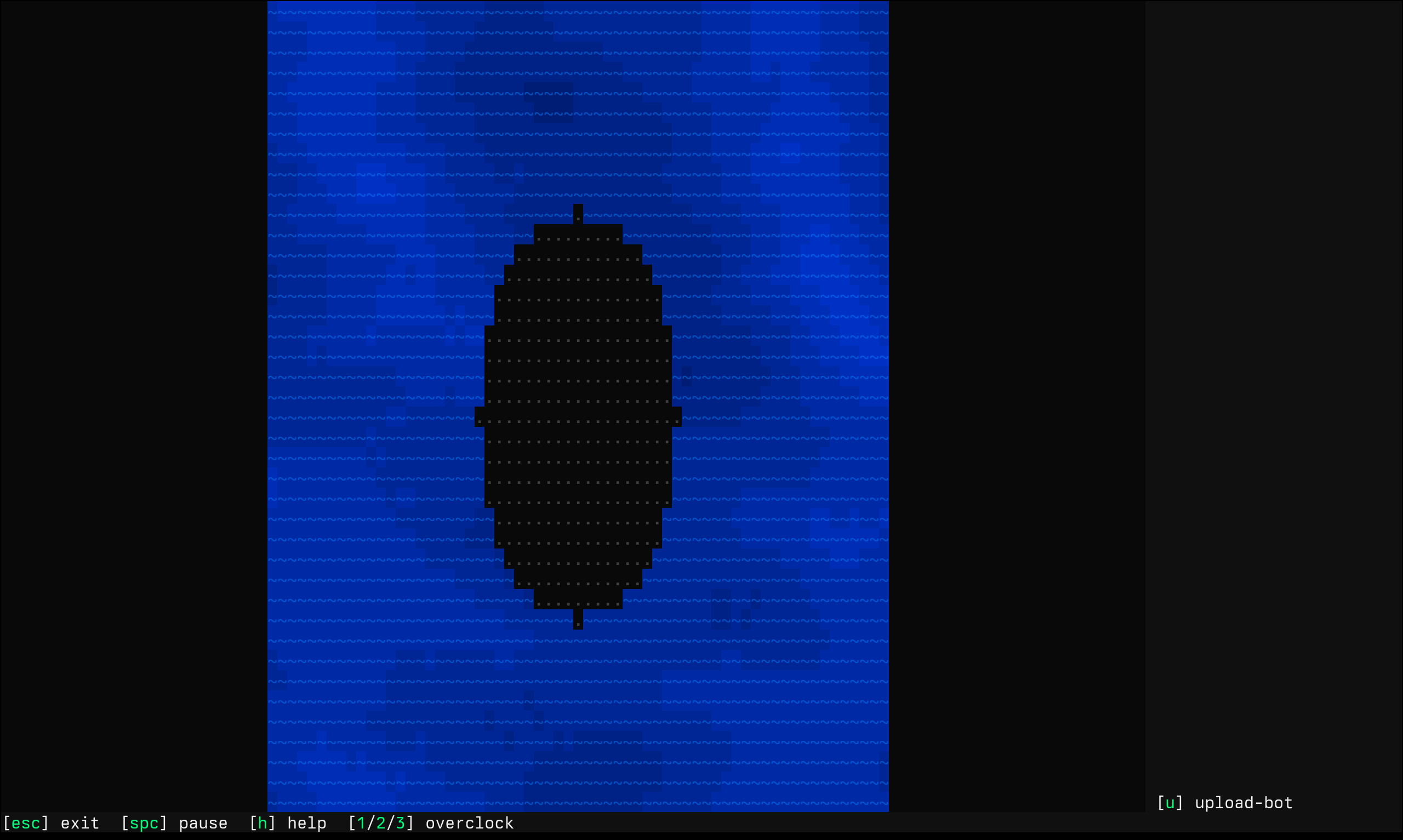

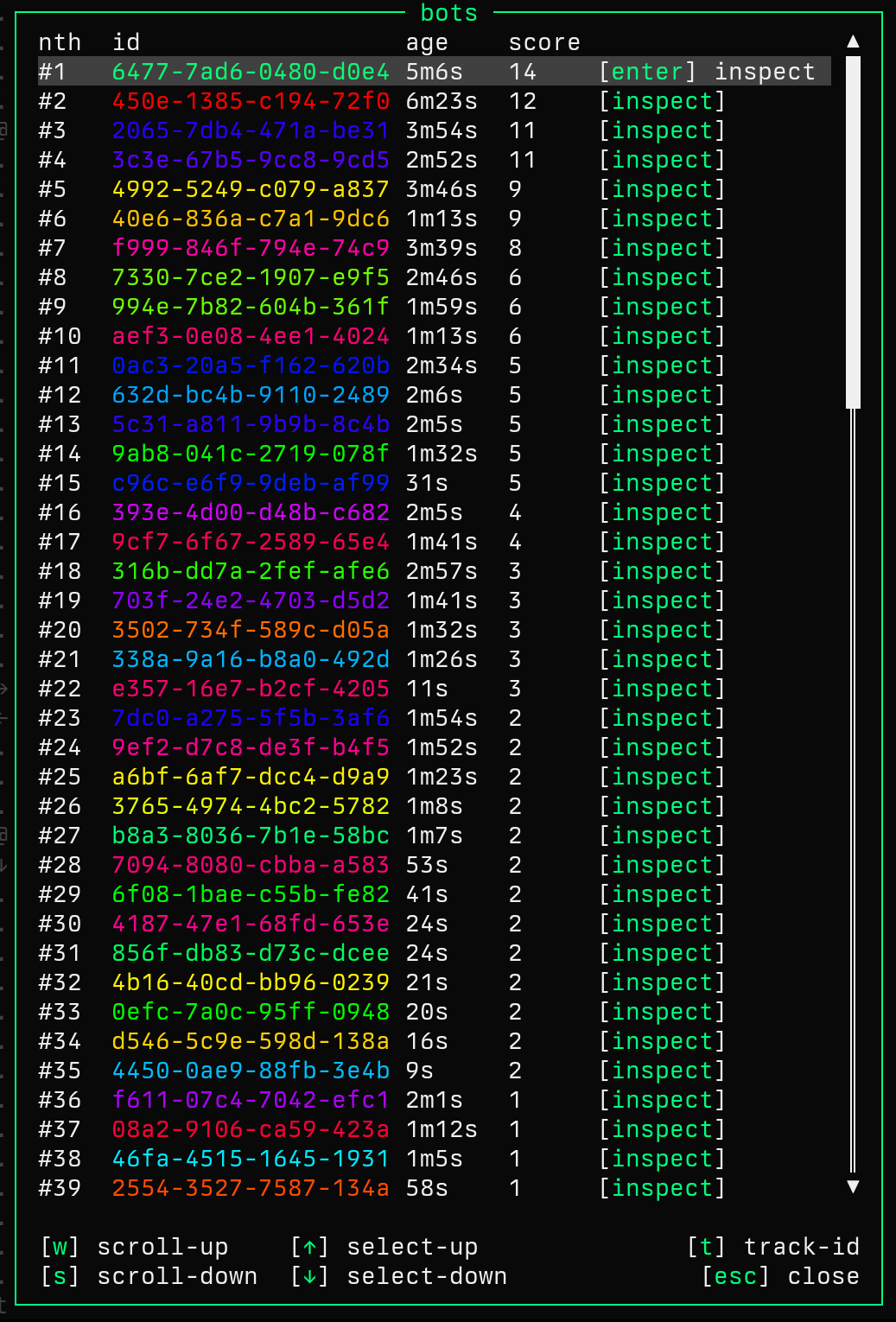

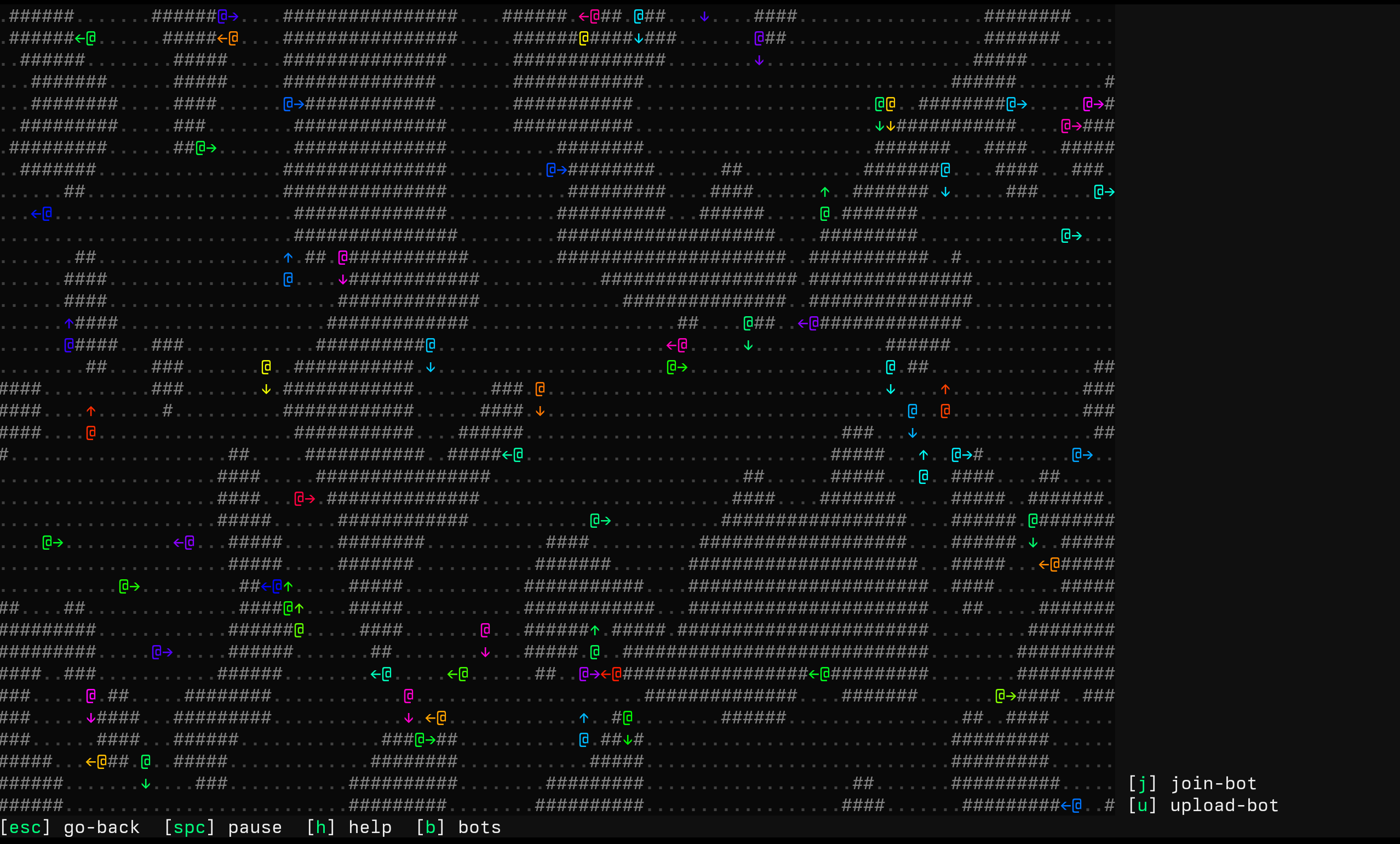

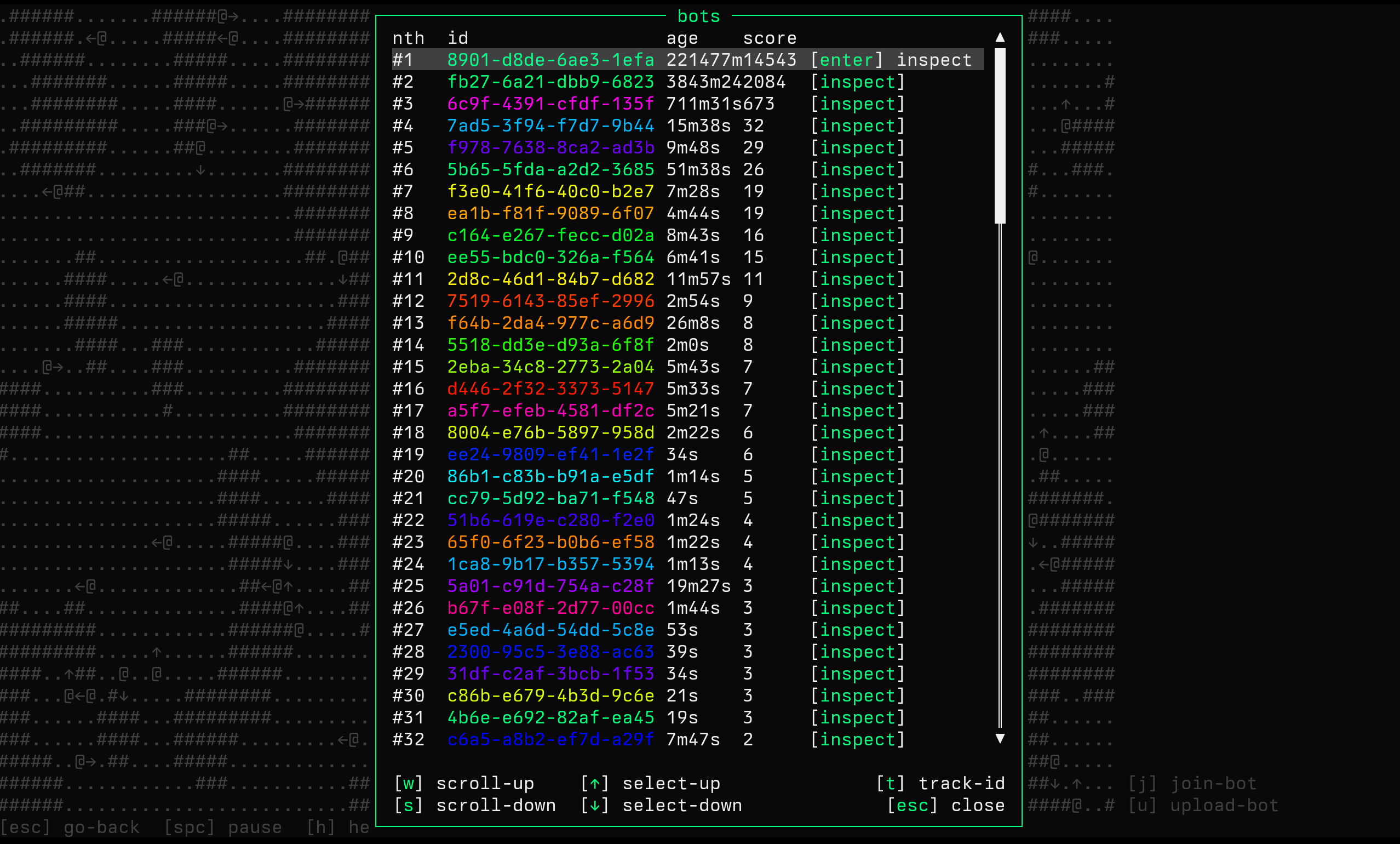

My programming spare time is dedicated mostly towards kartoffels, a game of mine where you're implementing firmwares for tiny robots:

For the next version I've started to work on a new challenge, a map where your bot is stranded on an island - as the player, you have to implement a bot that collects some nearby rocks and arranges them into a HELP ME text:

Since ocean is rarely stationary, I thought it'd be nice to add waves as a visual gimmick - inspired by a shader, I went with:

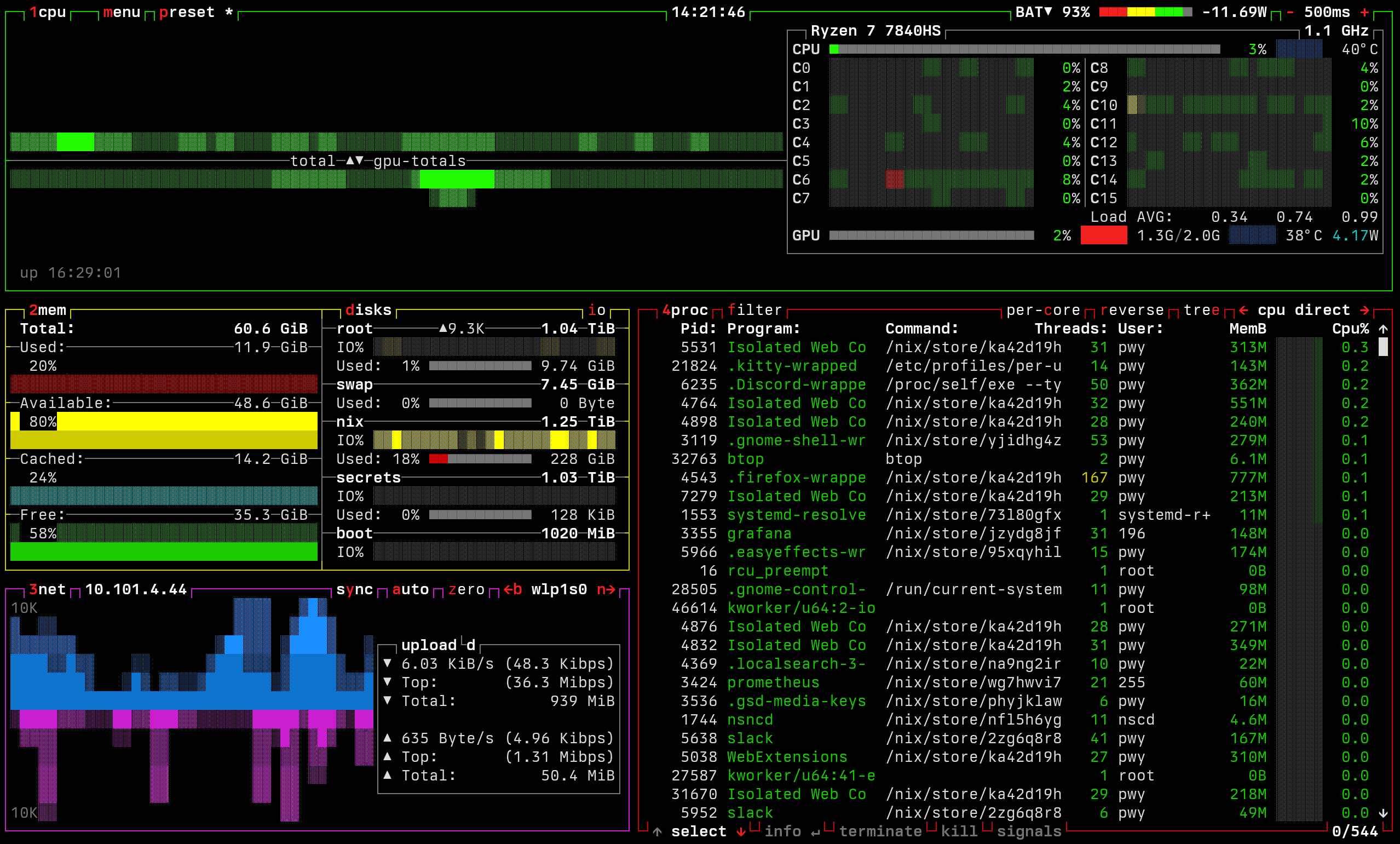

... then I ran htop and saw that damn, those wave calculations are heavy!

For each ocean tile you have to calculate a couple of sines and exponentials - it's not the end of the world for a modern CPU, but it does become problematic in my case, because the game is rendered entirely on the server, players are just SSH terminals.

So it's actually a couple of sines and exponentials for each pixel, for each frame, for each player.

Fortunately, eyes can be easily fooled. Even though the ocean seems alive, it's not like the entire ocean changes between every two consecutive frames - so, intuitively, we shouldn't be forced to recalculate everything from scratch all the time.

This is not a novel thought - games have been amortizing calculations across frames for years now, for better or for worse.

Eventually I've been able to get an acceptable frame time by keeping a buffer that's updated both less frequently than the main frame rate and merely stochastically - and this got me thinking:
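As a rough sketch of that kind of amortization (not the game's actual code): the cache below refreshes only every `every`-th frame, and even then recomputes just `budget` randomly chosen tiles. The names `WaveBuffer`, `tick` and `wave_height` are made up for illustration, the wave formula is a placeholder, and the tiny xorshift generator is there only because the standard library ships no RNG.

```rust
/// Hypothetical cache of per-tile wave values, refreshed a little at a time.
pub struct WaveBuffer {
    values: Vec<f32>,
    frame: u64,
    rng: u32, // xorshift32 state; must stay non-zero
}

impl WaveBuffer {
    pub fn new(tiles: usize) -> Self {
        WaveBuffer { values: vec![0.0; tiles], frame: 0, rng: 0x9E37_79B9 }
    }

    fn next_random(&mut self) -> u32 {
        // xorshift32: good enough for picking tiles, nothing more
        self.rng ^= self.rng << 13;
        self.rng ^= self.rng >> 17;
        self.rng ^= self.rng << 5;
        self.rng
    }

    /// Called once per frame. Only every `every`-th frame does any work, and
    /// then only `budget` random tiles are recomputed. Returns the number of
    /// expensive evaluations performed during this call.
    pub fn tick(&mut self, time: f32, every: u64, budget: usize) -> usize {
        let due = every > 0 && self.frame % every == 0;
        self.frame += 1;
        if !due || self.values.is_empty() {
            return 0;
        }
        for _ in 0..budget {
            let idx = self.next_random() as usize % self.values.len();
            self.values[idx] = wave_height(idx, time);
        }
        budget
    }

    pub fn get(&self, idx: usize) -> f32 {
        self.values[idx]
    }
}

/// Placeholder for the expensive per-tile math (a made-up formula).
fn wave_height(idx: usize, time: f32) -> f32 {
    let x = idx as f32 * 0.1;
    (x + time).sin() * (-0.05 * x).exp()
}
```

The trade-off is that any single tile may lag a few frames behind, which is exactly the kind of staleness the eye tends to forgive in a noisy ocean.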

Are there any other places where I'm wasting CPU cycles?

Waste

kartoffels delegates drawing to Ratatui, a Rust crate for building terminal user interfaces. It works in immediate mode, which means that every frame you're supposed to draw everything you want to see on the screen, always from scratch:

fn run(terminal: &mut DefaultTerminal) -> Result<()> { loop { terminal.draw(render)?; if should_quit()? { break; } } Ok(()) } // Always starts with an empty frame fn render(frame: &mut Frame) { let greeting = Paragraph::new("Hello World! (press 'q' to quit)"); frame.render_widget(greeting, frame.area()); }

After render() returns, the frame is painted - but it can't be sent to the user's terminal yet, since terminals can be slow and some could have a hard time processing a continuous stream of frames, especially with ANSI escape codes floating around.

Luckily, since a new frame is usually similar to the previous one, instead of sending everything, we can send just the difference - a couple of instructions such as go to (30,5), overwrite with 'vsauce'.
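To make the idea concrete, here is a minimal, library-free sketch that compares two frames stored row by row as plain `char`s and emits one "go to (x, y), write this" instruction per changed cell. The `Update` type and the `diff` signature are assumptions made for this illustration; they are not Ratatui's API.

```rust
/// One instruction for the terminal: move to (x, y) and write `ch`.
#[derive(Debug, PartialEq)]
pub struct Update {
    pub x: u16,
    pub y: u16,
    pub ch: char,
}

/// Compares two frames of identical size, both stored row by row, and
/// returns only the cells that differ.
pub fn diff(prev: &[char], next: &[char], width: u16) -> Vec<Update> {
    assert_eq!(prev.len(), next.len(), "frames must have the same size");
    assert!(width > 0, "width must be non-zero");
    let width = width as usize;

    prev.iter()
        .zip(next)
        .enumerate()
        .filter(|(_, (p, n))| p != n)
        .map(|(i, (_, n))| Update {
            x: (i % width) as u16,
            y: (i / width) as u16,
            ch: *n,
        })
        .collect()
}
```

For a 3-column frame going from `abc/def` to `abc/dXf`, this yields a single update at (1, 1).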

This is essentially a video codec, but for text - and Ratatui provides one out of the box:

// Takes two buffers, returns a list of differences between them (x, y, char). pub fn diff(&self, other: &Self) -> Vec<(u16, u16, &Cell)> { /* ... */ let mut updates = vec![]; let buffers = next_buffer.iter().zip(previous_buffer.iter()).enumerate(); for (i, (current, previous)) in buffers { if current != previous { let (x, y) = self.pos_of(i); updates.push((x, y, &next_buffer[i])); } /* ... */ } updates }

Even though this codec is rather minimalistic, what I've found out is that for some views - for instance this welcome screen you saw at the beginning - this diffing can take half of the frame time!

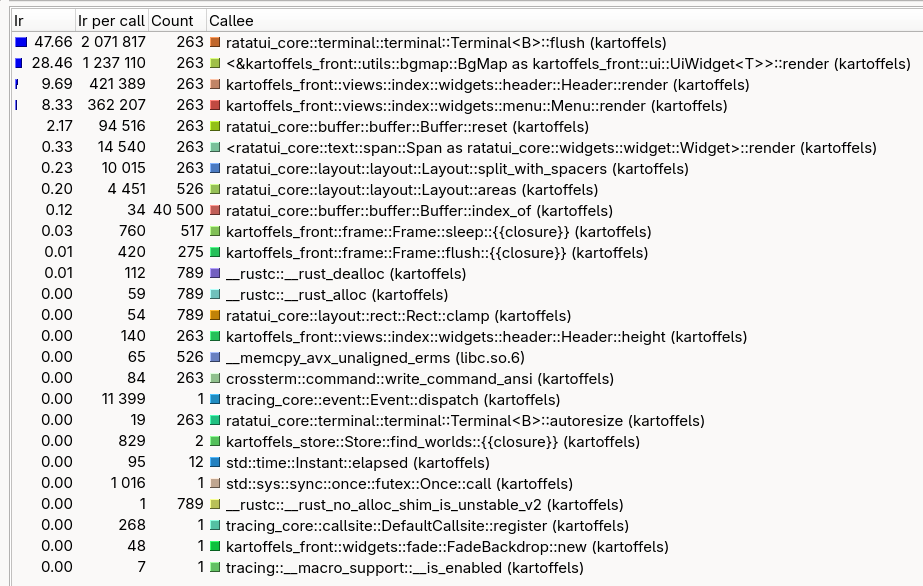

This is KCachegrind, showing timings for a single frame - you can see that Terminal::flush() (expanded below) takes about the same time as everything else, including drawing the frame:

Terminal::flush(), where Buffer::diff() is seen taking almost all of the time.

but if computers fast then why codec slow ??

Wager

Most of the overhead seems to come from, out of all things, Unicode ✅💻🖥️💻✅ - but I'm getting ahead of myself. When you see a terminal:

... you might imagine it's basically a two-dimensional array of characters:

struct Buffer { cells: Vec<Vec<char>>, }

But this is an oversimplification as each character (also known as cell) can be colored:

struct Buffer { cells: Vec<Vec<Cell>>, } struct Cell { symbol: char, foreground: Color, background: Color, /* underline, strike-through etc. */ }

... and even that is too naive - Rust's char can encode a single Unicode scalar value, while sometimes what you perceive as one character is actually a couple of chars bundled together.

For instance 🐕‍🦺 (service dog) is really two separate emojis, 🐕 (dog) and 🦺 (safety vest), glued together with an invisible zero-width joiner (U+200D).
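This is easy to check with nothing but the standard library. The service dog sequence is dog (U+1F415), a zero-width joiner (U+200D) and safety vest (U+1F9BA); the helper name `char_and_byte_len` is made up for this sketch.

```rust
/// Service dog, spelled out so that the invisible joiner is visible in source:
/// dog + zero-width joiner + safety vest.
pub const SERVICE_DOG: &str = "\u{1F415}\u{200D}\u{1F9BA}";

/// Returns (number of `char`s, number of UTF-8 bytes) in `s`.
pub fn char_and_byte_len(s: &str) -> (usize, usize) {
    (s.chars().count(), s.len())
}
```

What a terminal is supposed to show as a single symbol is three `char`s and eleven bytes, so neither a `char` nor a fixed-size array of them is a safe representation for a cell.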

So if we want to implement a terminal-ui application that supports Unicode, it must be able to represent cells with potentially multiple chars:

struct Cell { symbol: String, /* ... */ }

That is the case for Ratatui - and most TUI libraries, I suppose:

/// A buffer cell #[derive(Debug, Clone, Eq, PartialEq, Hash)] #[cfg_attr(feature = "serde", derive(serde::Serialize, serde::Deserialize))] pub struct Cell { /// The string to be drawn in the cell. /// /// This accepts unicode grapheme clusters which might take up more than one cell. /// /// This is a [`CompactString`] which is a wrapper around [`String`] that uses a small inline /// buffer for short strings. /// /// See <https://github.com/ratatui/ratatui/pull/601> for more information. symbol: CompactString, /* ... */ }

But doing so causes this inconspicuous bit from before:

pub fn diff(&self, other: &Self) -> Vec<(u16, u16, &Cell)> { /* ... */ for (i, (current, previous)) in buffers { if current != previous { /* ... */ } /* ... */ } /* ... */ }

... to suddenly become heavy, because the CPU might be forced to chase pointers and perform logic that otherwise wouldn't be there - an overhead for each cell, for each frame, for each player.

I've been fiddling with Ratatui internals for a while now and my notes tell me that dropping support for Unicode reduces the frame time by half - but those changes are somewhat un-upstreamable.

While they do help in my specific use case, I'd say that most TUI apps should have support for Unicode - for most apps this "overhead" doesn't matter, because they are run locally, and we're talking about a millisecond, if even; it's negligible.

("overhead" in quotes, since it's not really overhead when your application relies on the Unicode support being there - it would be overhead if the algorithm did something otherwise unnecessary.)

Besides, Unicode or not, the fundamental problem I've got with diffing is that it feels like a self-made problem: we need to diff, because every frame we get a brand new buffer. So what if instead of diffing, the UI just knew how to update the terminal incrementally, without the need to diff?

Consider a clock app:

------------------- | time = 12:00:00 | -------------------

If the time now changes to 12:00:01, why bother redrawing everything just to get the diff?

So this got me thinking - the diffing, plus the fact that I've got a bit of a beef with Ratatui's layouting method, which, as compared to Swift's:

var body: some View { VStack { Text("Hello") Text("World") } }

... or egui's:

egui::CentralPanel::default().show(ctx, |ui| { ui.label("Hello"); ui.label("World"); });

... has this disadvantage of forcing you to imperatively hand-place the widgets:

let (area, buf) = (frame.area(), frame.buffer_mut()); Paragraph::new("Hello").render(area, buf); Paragraph::new("World") .render(area.offset(Offset { x: 0, y: 1 }), buf); // ^--------------------------------^

Even though most of the time most of the calculations can be abstracted away using Layout:

let (area, buf) = (frame.area(), frame.buffer_mut()); let [hello_area, world_area] = Layout::vertical([ Constraint::Length(1), Constraint::Length(1), ]) .areas(area); Paragraph::new("Hello").render(hello_area, buf); Paragraph::new("World").render(world_area, buf);

... the awkwardness demon comes back once you have a layout whose size depends on its content - for instance in kartoffels there's this world-selection window:

... whose width relies on the longest world name, height relies on the number of worlds, and position relies on the window size itself (it's centered).

If you start to implement this in Ratatui, you quickly get backed into a corner where you have to calculate the layout before you can draw, but you can't easily calculate the layout until you get to the drawing part:

// Step 1: Center the area horizontally let [_, area, _] = Layout::horizontal([ Constraint::Fill(1), Constraint::Length(/* ughh, expected width? */), Constraint::Fill(1), ]) .areas(area); // Step 2: Center the area vertically let [_, area, _] = Layout::vertical([ Constraint::Fill(1), Constraint::Length(/* ughh, expected height? */), Constraint::Fill(1), ]) .areas(area); /* drawing happens here */

Of course, you clearly can precalculate everything by hand:

let expected_width = // left-right padding: 2 // name of the longest world or the `go back` button: + worlds .iter() .map(|world| { 4 // `[1] `, `[2] ` etc. + world.name.len() }) .max() .unwrap() .max(13); // `[esc] go-back` let expected_height = worlds.len() + 2; // Step 1: Center the area horizontally let [_, area, _] = Layout::horizontal([ Constraint::Fill(1), Constraint::Length(expected_width), Constraint::Fill(1), ]) .areas(area); // Step 2: Center the area vertically let [_, area, _] = Layout::vertical([ Constraint::Fill(1), Constraint::Length(expected_height), Constraint::Fill(1), ]) .areas(area);

... it's just that even if you abstract the widgets, the calculations remain a chore.
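For what it's worth, the arithmetic itself can at least be isolated into plain functions that don't depend on any UI library. The sketch below mirrors the numbers used above (a 4-character `[1] ` prefix, 2 cells of padding, 2 extra rows); `Rect`, `content_size` and `centered` are hypothetical helpers, not Ratatui types.

```rust
/// Rectangle in terminal cells (hypothetical helper type).
#[derive(Debug, PartialEq, Clone, Copy)]
pub struct Rect {
    pub x: u16,
    pub y: u16,
    pub width: u16,
    pub height: u16,
}

fn to_u16(n: usize) -> u16 {
    n.min(u16::MAX as usize) as u16
}

/// Expected (width, height) of the window, derived from its content.
pub fn content_size(world_names: &[&str], footer: &str) -> (u16, u16) {
    let widest_world = world_names
        .iter()
        .map(|name| 4 + name.chars().count()) // `[1] `, `[2] ` etc.
        .max()
        .unwrap_or(0);
    let width = 2 + widest_world.max(footer.chars().count()); // + padding
    let height = world_names.len() + 2;
    (to_u16(width), to_u16(height))
}

/// Centers a `width` x `height` rectangle inside `outer`, shrinking it if
/// it doesn't fit.
pub fn centered(outer: Rect, width: u16, height: u16) -> Rect {
    let width = width.min(outer.width);
    let height = height.min(outer.height);
    Rect {
        x: outer.x + (outer.width - width) / 2,
        y: outer.y + (outer.height - height) / 2,
        width,
        height,
    }
}
```

This keeps the chore testable, but it doesn't remove it: every window still needs its own `content_size()` that has to be kept in sync with whatever the drawing code actually does.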

So, those were - and still remain - the pain points I have with Ratatui:

- diffing feels "unnecessary" and takes "too much" time,

- laying widgets out feels "like a chore".

What's more, in my specific case:

- I don't care about proper Unicode support,

- I'm fine with better performance at the cost of worse developer experience.

With those in mind and cargo new at my fingertips, would I be able to come up with a better library?

Wisdom

Turns out - no! But hey, I've learned a thing or two - let me walk you through a couple of decisions I've made and through my thought process.

Wisdom - Widgets

Since I'd like to avoid repainting unchanged bits of the screen, I've decided that my library cannot use Ratatui's approach of "let's run a function that draws everything" - instead, I went with a widget tree.

If you've done a bit of HTML or Swift or Yew or any modern UI library really, you're probably familiar with the concept - instead of describing the interface imperatively, you describe it mostly declaratively, like so:

fn ui() -> impl ui::Node { ui::column() .with(ui::text("Hello")) .with(ui::text("World")) }

In practice this can be implemented with a trait:

pub trait Node { // aka "widget" or "component" fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16); }

... and a couple of types such as:

pub struct Text { body: String, } impl Text { pub fn set(&mut self, body: impl Into<String>) { self.body = body.into(); } } impl Node for Text { fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16) { /* draw text to buffer at (x, y) */ } }

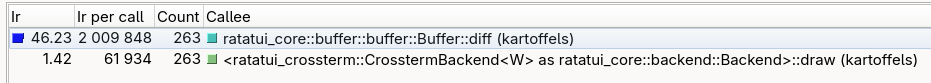

Under this design, rendering every frame consists of painting the root node which then recursively paints its children and so on - for instance this screen from before:

... could be described by this tree (in reality this would be Rust code, of course):

(zstack (background) (centered (window "play" (vstack (button "1" "arena") (button "2" "grotta") (line) (button "esc" "go-back")))))

For convenience, most UI libraries tell you to build a new widget tree every frame - but since we're fine with trading worse developer experience for better performance, we're going to re-use the same tree in-place, just with manually-tracked dirty flags:

pub struct Text { body: String, dirty: bool, } impl Text { pub fn set(&mut self, body: impl Into<String>) { self.body = body.into(); self.dirty = true; } } impl Node for Text { fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16) { if !self.dirty { // Already drawn return; } /* draw text to buffer at (x, y) */ self.dirty = false; } }

... aaand hey what's that - this is day zero and we're already cheating!

After all, keeping a dirty flag per node is a form of diffing, simply in "node-space" (per each widget) instead of "cell-space" (per each cell).

I think diffing limited to nodes is acceptable, though - it's linear in the number of nodes displayed on the screen, it doesn't depend on the screen size itself. So the "cost" would be 9 for the scene above (9 nodes) instead of, say, 128*64 (width * height) if we were to diff terminal cells.
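A self-contained toy version shows the effect. To keep it runnable, the buffer is replaced by a `Vec<String>` that records what got drawn, positions are dropped, and `paint()` returns the number of nodes that actually painted; all of that is a simplification made for this sketch, not the library's real interface.

```rust
pub trait Node {
    /// Paints the node if it is dirty; returns how many nodes drew something.
    fn paint(&mut self, out: &mut Vec<String>) -> usize;
}

pub struct Text {
    body: String,
    dirty: bool,
}

impl Text {
    pub fn new(body: &str) -> Self {
        Text { body: body.to_string(), dirty: true }
    }

    pub fn set(&mut self, body: &str) {
        // Setting the same value again doesn't invalidate the node
        if self.body != body {
            self.body = body.to_string();
            self.dirty = true;
        }
    }
}

impl Node for Text {
    fn paint(&mut self, out: &mut Vec<String>) -> usize {
        if !self.dirty {
            return 0; // already drawn
        }
        out.push(self.body.clone());
        self.dirty = false;
        1
    }
}

pub struct Column {
    pub children: Vec<Text>,
}

impl Node for Column {
    fn paint(&mut self, out: &mut Vec<String>) -> usize {
        let mut painted = 0;
        for child in &mut self.children {
            painted += child.paint(out);
        }
        painted
    }
}
```

Painting a two-label column costs two draws on the first frame, zero on the second, and one after a single `set()` - the tree is still walked each frame, but the walk is proportional to the number of nodes rather than the number of cells.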

Now, does it look good on paper? Quite! So let's get to the nasty parts.

Reality check: Events

What about event processing - how can we handle key presses, mouse clicks?

In Ratatui this is painfully obvious, you simply check the keyboard and mouse every frame:

fn should_quit() -> bool { if event::poll(Duration::from_millis(250)).unwrap() { event::read() .unwrap() .as_key_press_event() .is_some_and(|key| key.code == KeyCode::Char('q')) } else { false } }

But the moment you use a widget tree, the control gets inverted - now the UI library has to somehow call you. You can then choose between callbacks:

fn quit_btn(on_press: impl FnOnce()) -> impl ui::Node { ui::button("Press Q to quit") .on_key_pressed('q', on_press) }

... channels:

fn quit_btn(tx: mpsc::Sender<()>) -> impl ui::Node { ui::button("Press Q to quit").on_key_pressed('q', move || { _ = tx.send(()); }) }

... or some sort of pretend-algebraic effects:

enum QuitBtnEvent { Quit, } fn quit_btn() -> impl ui::Node<QuitBtnEvent> { ui::button("Press Q to quit") .on_key_pressed('q', QuitBtnEvent::Quit) }

But none of them compose that well once you have to bundle widgets together:

fn menu( on_start: impl FnOnce(), on_settings: impl FnOnce(), on_quit: impl FnOnce(), ) -> impl ui::Node { ui::column() .with(start_btn(on_start)) .with(settings_btn(on_settings)) .with(quit_btn(on_quit)) }

enum MenuEvent { Start, Settings, Quit, } fn menu() -> impl ui::Node<MenuEvent> { ui::column() .with( start_btn() .map_effect(|_| MenuEvent::Start) ) .with( settings_btn() .map_effect(|_| MenuEvent::Settings) ) .with( quit_btn() .map_effect(|_| MenuEvent::Quit) ) }

Ick.

Using declarative widgets instead of imperative code also spawns a new dimension of problems - state management.

Reality check: State

Say, we're writing a to-do app - how do you pass the state from parent nodes to their children?

fn tasks() -> impl ui::Node { /* okkk, where do i get the list from ?? */ }

Sure, you can pass the list when you create the widget:

fn tasks(tasks: &[Task]) -> impl ui::Node { /* ... */ }

... but since we're not rebuilding the entire widget tree every frame, how do you update it?

enum Event { DeleteSelectedTask, } fn menu() -> impl ui::Node<Event> { /* ... */ } fn tasks<T>(tasks: &[Task]) -> impl ui::Node<T> { /* ... */ } fn main() { let mut tasks = vec![ /* ... */ ]; let ui = Ui::new(); ui.set_root( ui::column() .with(menu()) .with(tasks(&tasks)) ); loop { ui.paint(); match ui.react() { Event::DeleteSelectedTask => { tasks.remove(/* ... */); /* how do you refresh the `tasks()` widget? */ } } } }

I've tried to play with lenses where you extend the node's definition to support arbitrary arguments:

pub trait Node<Arg, Event> { fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16, arg: &Arg); }

... and then you weave them throughout the call stack:

// Menu doesn't need any arguments, it works for any: fn menu<A>() -> impl ui::Node<A, Event> { /* ... */ } // On the other hand, the task list doesn't throw any effect: fn tasks<E>() -> impl ui::Node<[Task], E> { /* ... */ } fn main() { let mut tasks = vec![ /* ... */ ]; let ui = Ui::new(); ui.set_root( ui::column() .with(menu()) .with(tasks()) ); loop { ui.paint(&tasks); /* ... */ } }

... but I couldn't figure out any design that would work given anything more complicated than a Hello World! and maybe a single text input.

A natural approach would be to keep the state and UI materialized separately:

fn task(task: &Task) -> impl ui::Node<Event> { /* title, priority, an "open" button etc. */ } fn main() { // state: let mut tasks = vec![ /* ... */ ]; // user interface: let mut task_nodes = tasks.iter().map(task).collect(); let ui = Ui::new(); ui.set_root( ui::column() .with(menu()) .with(list(task_nodes)) ); /* ... */ }

... but in addition to being memory-heavy, this approach doesn't play well with the concept of ownership:

loop { ui.paint(); match ui.react() { Event::DeleteSelectedTask => { tasks.remove(/* ... */); // ugh, `task_nodes` has been moved to `ui.set_root()`, it's not // accessible anymore here: // task_nodes.remove(/* ... */); } } }

My initial demos used an Arc<RwLock<...>>:

fn main() { /* ... */ let task_nodes = tasks.iter().map(task).collect(); let task_nodes = Arc::new(RwLock::new(task_nodes)); /* ... */ ui.set_root( ui::column() .with(menu()) .with(list(task_nodes.clone())) ); loop { ui.paint(); match ui.react() { Event::DeleteSelectedTask => { tasks.remove(/* ... */); task_nodes.write().remove(/* ... */); } } } }

... but that, in turn, means that you have to lock that RwLock every frame, also not great.

Cursive does something else, akin to JavaScript's document.getElementById(), where you can query a widget for a child:

fn delete_name(s: &mut Cursive) { let mut select = s.find_name::<SelectView<String>>("select").unwrap(); match select.selected_id() { None => s.add_layer(Dialog::info("No name to remove")), Some(focus) => { select.remove_item(focus); } } }

This solves the ownership issue, but it also makes querying for widgets a fallible operation - if you rename a widget but forget to rename references to it, your code will probably panic or display a spurious error message.

What's more, under this design finding widgets takes non-constant time, which could become a performance bottleneck for more complicated views:

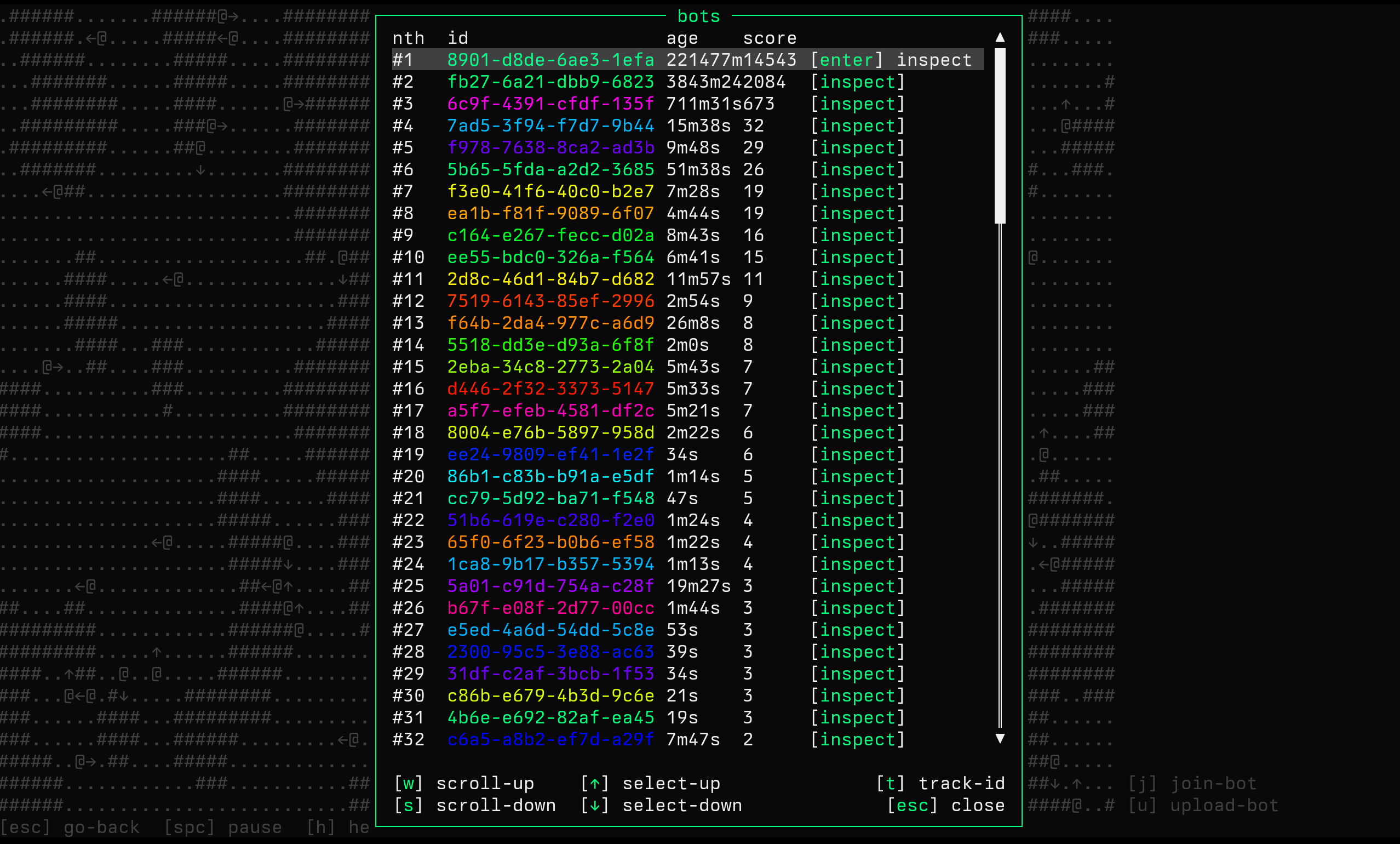

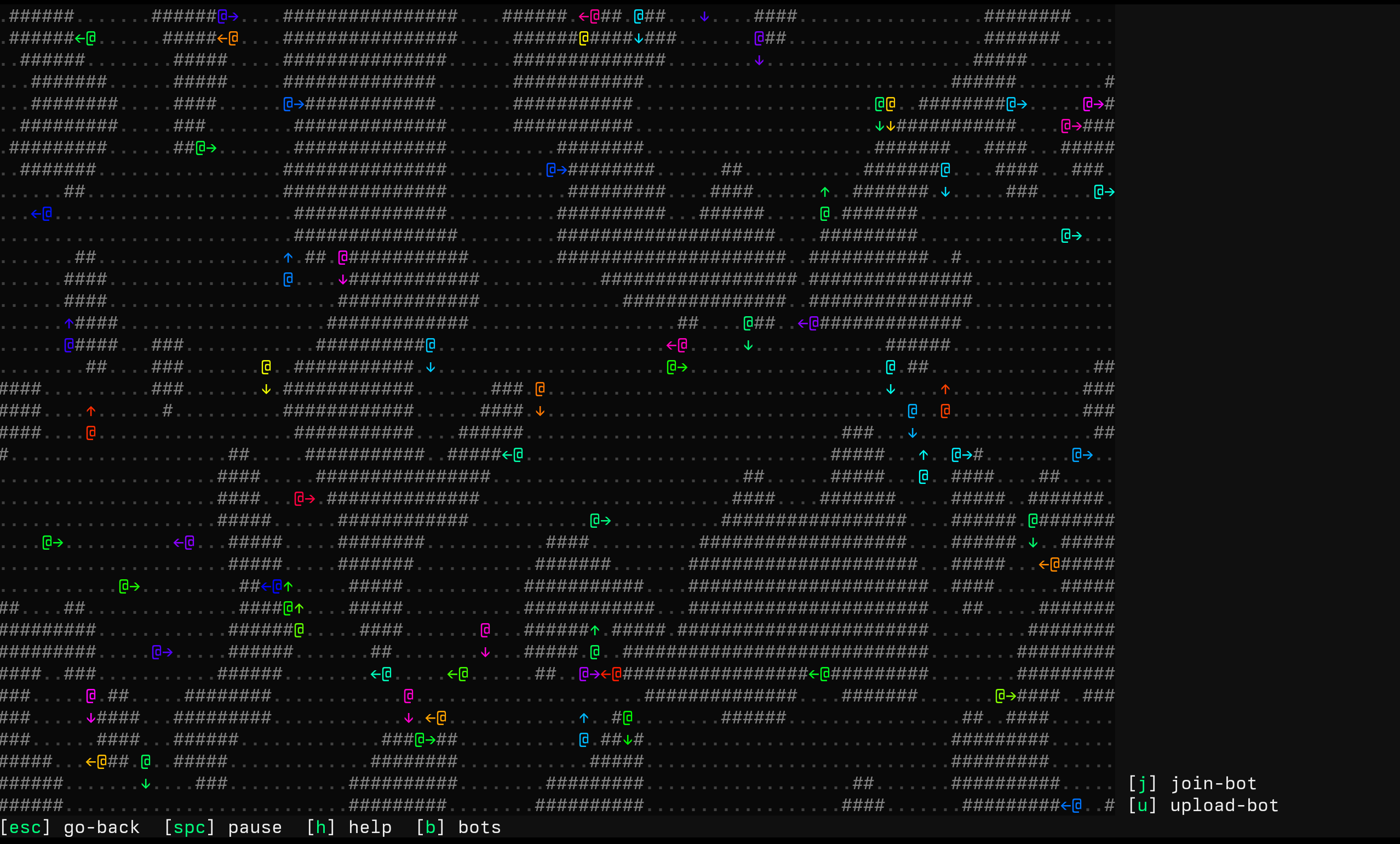

Reality check: Stacking

On the topic of complicated views, another problem I've stumbled upon was z-stacking - consider:

If you now press b, a modal will open and cover the map:

This doesn't pause the game, the map is still getting updated in the background - you can see bots moving and happily stabbing each other behind the window (I mean, not here, since that's just a screenshot, but you know).

In terms of the widget-tree, this is something akin to:

(zstack (map) (backdrop) (bots-dialog (...)))

Now, we'd like to avoid diffing, right? So when Map::paint() is called, it needs to somehow know not to overwrite the area that is allocated for BotsDialog.

This is plenty problematic, because zstack behaves like:

pub struct ZStack { nodes: Vec<Box<dyn Node>>, } impl Node for ZStack { fn paint(&mut self, /* ... */) { for node in &mut self.nodes { node.paint(/* ... */); } } }

... and what we'd need is for Map, the first node on the list, to somehow know where the next nodes are going to paint before they are painted.

Let's pretend that we've somehow managed to figure it out, though - another problem is that not all nodes are opaque, some of the nodes behave like shaders, i.e. they just modify whatever is underneath them.

my man that's quite complicated for a tui game, are you sure you

don't "my man" me here, we're all in this together now

Uhm, anyway -- while it sounds spooky, the problem is simple. Compare the previous screenshots:

After a window is opened, there's an extra "grayscale" effect applied to whatever lies "underneath" that window - that's called backdrop.

If you always render an entire frame from scratch, you can simply iterate through all of the cells and replace their foreground color with gray (or do some kind of interpolation if you want to have a fade in effect or something).

But we don't want to generate the entire frame from scratch, we want to be incremental - so the backdrop effect has to somehow know what cells have changed between the current and the previous frame, like:

impl Node for ZStack { fn paint(&mut self, /* ... */) -> /* ... */ { let mut invalidated_points = HashSet::<(u16, u16)>::new(); for node in &mut self.nodes { let new_invalidated_points = node.paint(/* ... */, &invalidated_points); invalidated_points.extend(new_invalidated_points); } invalidated_points } }
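Filled in with just enough detail to run, the idea could look like the sketch below. Everything apart from the `ZStack` loop is an assumption made for illustration: the `Buffer` is reduced to a map of symbols plus a set of grayed-out points, `Map` repaints whatever is queued in `pending`, and `Backdrop` re-applies its effect only to the points invalidated underneath it.

```rust
use std::collections::{HashMap, HashSet};

pub type Point = (u16, u16);

/// Toy buffer: symbols plus a "grayed-out" marker instead of real colors.
#[derive(Default)]
pub struct Buffer {
    pub symbols: HashMap<Point, char>,
    pub grayed: HashSet<Point>,
}

pub trait Node {
    /// `below` lists points repainted lower in the stack during this frame;
    /// the return value lists the points this node touched itself.
    fn paint(&mut self, buf: &mut Buffer, below: &HashSet<Point>) -> HashSet<Point>;
}

/// Opaque node: repaints only the cells queued in `pending`.
pub struct Map {
    pub pending: Vec<(Point, char)>,
}

impl Node for Map {
    fn paint(&mut self, buf: &mut Buffer, _below: &HashSet<Point>) -> HashSet<Point> {
        let mut touched = HashSet::new();
        for (point, symbol) in self.pending.drain(..) {
            buf.symbols.insert(point, symbol);
            buf.grayed.remove(&point); // freshly painted cells are in full color
            touched.insert(point);
        }
        touched
    }
}

/// Shader-like node: modifies whatever changed underneath it.
pub struct Backdrop;

impl Node for Backdrop {
    fn paint(&mut self, buf: &mut Buffer, below: &HashSet<Point>) -> HashSet<Point> {
        buf.grayed.extend(below.iter().copied());
        below.clone()
    }
}

pub struct ZStack {
    pub nodes: Vec<Box<dyn Node>>,
}

impl Node for ZStack {
    fn paint(&mut self, buf: &mut Buffer, _below: &HashSet<Point>) -> HashSet<Point> {
        let mut invalidated_points = HashSet::new();
        for node in &mut self.nodes {
            let new_points = node.paint(buf, &invalidated_points);
            invalidated_points.extend(new_points);
        }
        invalidated_points
    }
}
```

Even in this toy form every node allocates and returns a set per frame, which hints at where the bookkeeping overhead starts to creep in.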

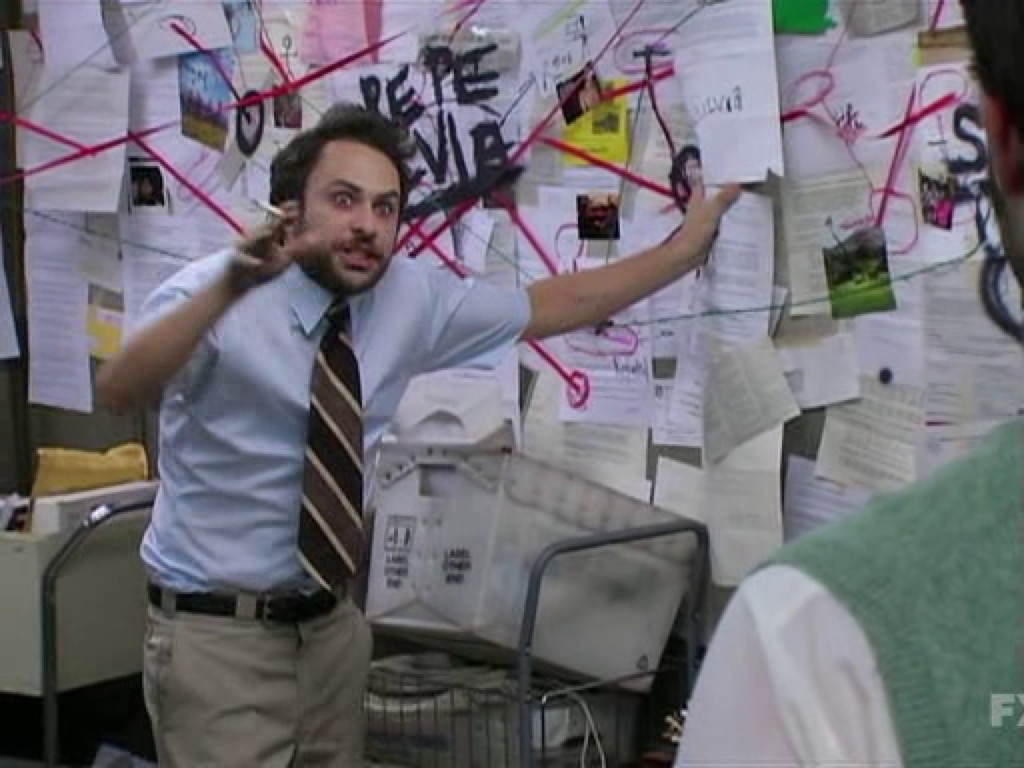

I don't frequently use memes on my blog, but at this point I realized I was just:

So

As you can see, all of the options here somehow suuuck - from event processing up to the incremental updates.

One of the reasons why I've eventually abandoned my custom tui-ui project was that I was getting increasingly worried that the overhead of managing trees and subtrees and selectors and surfaces and whatnot would be greater than any potential gains I could win by avoiding the diffing.

A galactic UI algorithm, if you want.

Wisdom - Layouts

On the upside, there is one decision that turned out quite nice - as I said before, one of the problems I've got with Ratatui is that you have to place the widgets by hand:

Paragraph::new("World") .render(area.offset(Offset { x: 0, y: 1 }), buf); // ^--------------------------------^

... which stands in contrast to, say, Swift:

var body: some View { VStack { Text("Hello") Text("World") } }

While I'm not a Swift developer, I would say that I'm Swift-aware, so for my library I've decided to ~~steal~~ borrow a couple of concepts from SwiftUI:

- a single Text widget for all-things-text (compared to Ratatui's Span vs Line vs Paragraph),

- HStack, VStack, and ZStack for all-things-placement,

- ProposedViewSize for all-things "how do I make widgets talk to each other".

But first things first.

Fundamentally, the problem with laying things out is that it's a chicken-and-egg game - that's because from a drawing-node's point of view, an ideal rendering interface would be just:

pub trait Node { fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16); }

But this simplified interface doesn't work with "combinator-nodes" such as:

pub struct Centered<T> { body: T, } impl<T> Node for Centered<T> { fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16) { /* okk, how do i query `body` for its size? */ } }

let's add a new method, then - size()!

Sure:

pub trait Node { fn size(&self) -> (u16, u16); fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16); }

That's cute, but what about nodes that don't inherently have static size? Like a text that wraps - its size depends on the provided width (the narrower you make the node, the longer it's going to be).

This means you need something like:

pub trait Node { fn size(&self, width: u16, height: u16) -> (u16, u16); fn paint(&mut self, buffer: &mut Buffer, x: u16, y: u16); }

But this, in turn, means that most of the time you're going to be walking your widget tree twice - once to size it and then another time to paint it, even if the inner-widget is already of known size.

For instance, if you had to print a row of non-wrapping text:

| first | second | third |

... then under the size() approach that would require calling each widget twice:

- first.size()

- second.size()

- third.size()

- first.paint()

- second.paint()

- third.paint()

... even though all of the widgets have up-front known sizes and even though we're not centering anything - can we do better?

My library's nodes have two main methods, paint() and mount():

pub trait Node { fn paint(&mut self, buffer: &mut Buffer, pos: Pos) -> Result<Size, RSize>; fn mount(&mut self, size: Size) -> Option<Size>; }

Under this design:

- when a node has static size (like a box with constant width and height or a text that doesn't wrap), its paint() always returns Ok(ThatStaticSize),

- when a node needs help with determining its size, its paint() returns Err with a rough constraint (e.g. width must be between 2..50) - the parent is then responsible for calling mount() with a proposed size until the child-node accepts the size via mount(), after which the parent calls paint() again.

So you can imagine paint() = Err as communicating to the parent help, i need to get sized!, which the parent then handles via mount().

This makes it possible to handle the happy case (e.g. a row of sized widgets) without having to walk the tree multiple times, at a small "cache miss" penalty for when the child-node actually needs to get sized (in which case its paint() method would get called twice - but the first time wouldn't draw anything, it would insta-return Err).

(most parents also cache the size of their children, in which case a child can make paint() = Err to communicate to the parent that help, i need to get re-sized!, e.g. after you change a text.)
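The handshake described above can be sketched in a few dozen lines. This is a simplified reconstruction under loud assumptions, not the code from the repository: the buffer is a `Vec<String>`, `Pos` is dropped, the fields of `RSize` are invented, and `mount()` returning `Some(size)` is taken to mean "accepted, and this is the size I settled on".

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Size { pub width: u16, pub height: u16 }

/// Rough constraint reported by a node that can't size itself
/// (fields are hypothetical).
pub struct RSize { pub min_width: u16, pub max_width: u16 }

pub trait Node {
    fn paint(&mut self, out: &mut Vec<String>) -> Result<Size, RSize>;
    fn mount(&mut self, size: Size) -> Option<Size>;
}

/// Non-wrapping text: its size is known up front, so paint() never fails.
pub struct Label(pub String);

impl Label {
    fn size(&self) -> Size {
        Size { width: self.0.chars().count() as u16, height: 1 }
    }
}

impl Node for Label {
    fn paint(&mut self, out: &mut Vec<String>) -> Result<Size, RSize> {
        out.push(self.0.clone());
        Ok(self.size())
    }
    fn mount(&mut self, _size: Size) -> Option<Size> {
        Some(self.size())
    }
}

/// Wrapping text: needs a width from the parent before it can paint.
pub struct Wrapped { body: String, mounted: Option<Size> }

impl Wrapped {
    pub fn new(body: &str) -> Self {
        Wrapped { body: body.to_string(), mounted: None }
    }
}

impl Node for Wrapped {
    fn paint(&mut self, out: &mut Vec<String>) -> Result<Size, RSize> {
        let size = match self.mounted {
            Some(size) => size,
            // "help, i need to get sized!" - nothing is drawn here
            None => {
                let max_width = self.body.chars().count().max(1) as u16;
                return Err(RSize { min_width: 1, max_width });
            }
        };
        let chars: Vec<char> = self.body.chars().collect();
        for line in chars.chunks(size.width as usize) {
            out.push(line.iter().collect());
        }
        Ok(size)
    }
    fn mount(&mut self, size: Size) -> Option<Size> {
        if size.width == 0 {
            return None; // proposal rejected
        }
        let width = size.width as usize;
        let lines = (self.body.chars().count() + width - 1) / width;
        let accepted = Size { width: size.width, height: lines.max(1) as u16 };
        self.mounted = Some(accepted);
        Some(accepted)
    }
}

/// What a parent does: paint, and only on Err fall back to mount + paint.
pub fn paint_child(child: &mut dyn Node, available_width: u16, out: &mut Vec<String>) -> Option<Size> {
    match child.paint(out) {
        Ok(size) => Some(size),
        Err(hint) => {
            let width = available_width.clamp(hint.min_width, hint.max_width);
            child.mount(Size { width, height: 0 })?;
            child.paint(out).ok()
        }
    }
}
```

A `Label` goes through `paint_child()` with a single call, while a `Wrapped` text pays for one extra, drawing-free `paint()` before it gets mounted.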

Armed with this, I've been able to successfully implement all of the basic combinators like HStack or VStack, and the code turned out only mildly spooky:

https://codeberg.org/pwy/kruci/src/branch/main/src/nodes/stack.rs

... and hey, it's tested!

Summary

As I said, I've eventually abandoned the project - for the most part, I've just realized that I need to pick my fights better.

There's no pressure for kartoffels to have a hyper-optimized user interface, Ratatui is good enough - as it stands, the game does not have any performance issues. The time I've sacrificed to pursue this user interface experiment could've been better spent actually improving the game, developing new challenges and whatnot (optimizing Ratatui, even).

Of course, both the game itself and this UI experiment are nothing more than side projects, both are written for fun - so you could well argue: who cares, why bother being a project manager for yourself? Why bother creating a game on hard mode, with a server-rendered interface?

I guess I'd just like to see how far I can get with this idea. Many people wrote to me that being able to just ssh into the game is a nice quality of life thingie, so I'd like to continue with that as the "core design choice" and see what problems unfold.

Sometimes in pursuing a larger goal I find myself lost in a sea of seemingly small, unlit corridors that I feel I need to explore - sometimes I do and it turns out good (migrating from 64-bit RISC-V to 32-bit RISC-V was a good choice), but sometimes you just have to hit the brakes and roll back.

I think it's okay to both explore and to give up, other paths await.